The Deepfake Dilemma: A Threat to Democracy and Personal Identity

Technology evolves at a breakneck pace, and AI-generated synthetic replicas of real people are no longer confined to science fiction. A striking example was recently spotlighted by ABC News Verify, in which a federal senator's voice was cloned, with her explicit permission, for only around A$100. As such tools become increasingly accessible, the potential misuse of deepfake and voice cloning apps poses a serious risk to democratic processes and personal identities.

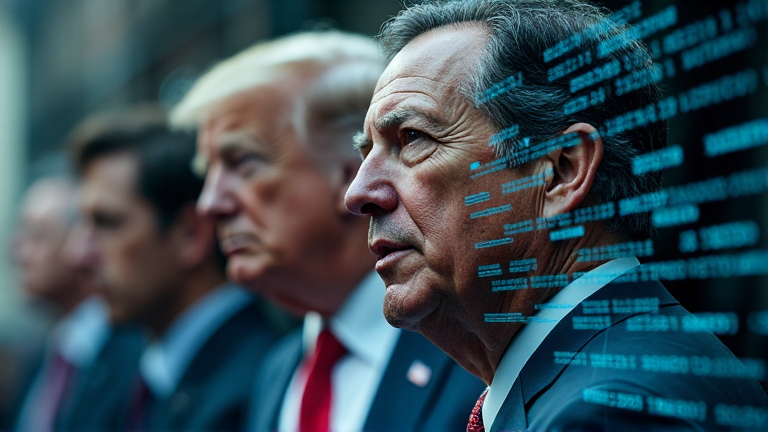

The Rise of Deepfakes and Their Implications

Deepfake technology now manufactures visuals and voices that closely mimic reality, blurring the line between what is genuine and what is fabricated. Its ability to convincingly simulate a person's likeness raises urgent concerns. Cases have shown that when the public is exposed to AI-crafted replicas, such as the cloned voice of a prominent senator, many initially fail to recognize that the content is fabricated. This misperception can easily pave the way for misinformation and damage to individual reputations.

One notable incident took place in 2020, when a deepfake video depicted the then Queensland Premier appearing to say that the state was "cooked" and drowning in "massive debt." Garnering nearly 1 million views on social media, the video underscored how convincingly fabricated material can influence public sentiment and undermine electoral integrity.

The Limitations of Existing Legal Frameworks

Australian law currently offers a patchwork of potential legal recourse against deepfakes, ranging from defamation and privacy laws to consumer protection measures and complaints lodged with the eSafety Commissioner. Yet these provisions fall short in addressing the unique challenges posed by AI-generated replicas.

For instance, while copyright law may protect a tangible, recorded delivery of a voice or image, it does not extend to the digital clone itself. A senator’s 90-second voice recording, produced by ABC for legitimate purposes, can be copyrighted as a sound recording, yet the cloned version—deemed authorless under current laws—remains unprotected. This legal gray area leaves individuals exposed to unauthorized uses of their identity.

Moral rights, such as the right of attribution and the right against false attribution, offer minimal shelter and do not cover the growing range of deepfakes, which are emerging as increasingly compelling tools for deception.

The Concept of Personality Rights

Across many U.S. jurisdictions, the notion of "personality rights" or the right of publicity acknowledges the commercial value of a person's name, likeness, and voice. High-profile cases involving celebrities like Bette Midler and Johnny Carson have illustrated the power of these rights in preventing unauthorized commercial exploitation of personal identity.

In contrast, legal protection in Australia lags behind, with the debate centering on whether statutory publicity rights should be introduced. Critics argue that existing laws such as consumer protection and tort law only offer fragmented coverage and may not adequately address the unique challenges of AI-fueled identity misappropriation.

A Call for Urgent Legislative Action

As deepfakes become commonplace, especially during key political events like elections, legislators and policymakers face mounting pressure to fortify defenses against digital impersonation. Adopting laws similar to the proposed U.S. "No Fakes Bill," which aims to protect image and voice through strict intellectual property rights, could offer a promising avenue for Australia.

The growing prevalence of deepfake technology demands a serious reassessment of current legal norms. By establishing comprehensive personality rights, Australia could better safeguard its citizens' identities and ensure the integrity of its democratic processes.

The narrative is clear: as electoral campaigns approach and technology continues to blur the boundaries of authenticity, the implementation of robust legal protections is not just desirable—it is essential.

Note: This publication was rewritten using AI. The content was based on the original source linked above.